Early Audiometry

Before we had sophisticated audiometers and standardized test batteries, tuning forks were the first tools used to obtain information on an individual’s hearing acuity. In the 19th century, tuning fork tests were developed based on auditory phenomena we are still familiar with today. The most notable tuning fork hearing tests are the Weber test, developed in 1845 by Ernst Weber, and the Rinne test, developed in 1855 by Heinrich Rinne. Additionally, Marie Gelle developed a tuning fork test for stapes fixation in 1881. These early contributions have lasting value: the Weber and Rinne tests are still widely used in audiology and ENT clinics, and Gelle’s method was the predecessor of modern-day tympanometry.

The psychophysical methods behind what we now call pure tone threshold testing were first described by Gustav Theodor Fechner in the 19th century: the method of limits, the method of constant stimuli, and the method of adjustment. The method of limits and the method of adjustment were heavily used in developing the modern-day threshold search for pure tone audiometry.

The first audiogram was developed in 1885 by Arthur Hartmann, who plotted left and right tuning fork responses and percentage of hearing on what he called an “Auditory Chart.” Hearing thresholds were first described in terms of frequency in 1903, on Max Wien’s “Sensitivity Curve.”

The Audiometer

One of the first instruments for measuring hearing, although quite different from modern-day audiometers, was introduced in 1899 by Carl E. Seashore, who sought to measure the “keenness of hearing.” This instrument ran on a battery and presented a tone or click.

Cordia C. Bunch was one of the first to study hearing acuity systematically. He developed the “pitch range audiometer” in 1919, a device that produced pure tones ranging from 30 to 10,000 Hz. Bunch used this tool to graph what he referred to as “hearing fields.” These graphs documented a patient’s full range of hearing, from thresholds up to what we now call uncomfortable loudness levels (UCL). Bunch pioneered many modern audiometric ideas and published a wide range of findings and theories from 1919 to 1943.

The first widely used commercial audiometer was developed in 1923 by Harvey Fletcher and R.L. Wegel. Called the Western Electric 2-A, it was rather limited and could present only 8 frequencies, and only via air conduction. Fletcher was also instrumental in promoting speech audiometry and developed a speech testing method in 1929.

Other companies began to release similar devices throughout the 1920s and 30s. These devices were used solely by otolaryngologists, but their use began to decline as it became apparent that a standardized calibration method was needed. Initially, each device was calibrated in its own way, often based on the hearing of a handful of lab technicians at each individual factory. As a result, the same patient could obtain completely different results on different audiometers.

In 1947, Georg von Békésy developed self-recording audiometry based on Fechner’s method of adjustment. Békésy invented a fully automated audiometer that used motors to adjust intensity and frequency. Patients tracked their own thresholds by holding down a button while the tone was audible, which caused the intensity to decrease, and releasing the button when the tone was no longer heard, which caused the intensity to increase. This is known as Békésy audiometry.
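
The tracking principle can be illustrated with a short Python sketch. It is a simplified, fixed-frequency illustration under stated assumptions, not a model of Békésy’s actual instrument. The listener `hears` is a hypothetical function that reports whether a level is audible. The level falls by a fixed step while the tone is reported audible and rises while it is not. The step size (2.5 dB), starting level and number of steps are illustrative choices, not values from the original device. The threshold is read as the mean of the reversal points, which is one simple way to approximate the midpoint of the sawtooth tracing.

```python
from statistics import mean
from typing import Callable, List, Optional


def bekesy_track(
    hears: Callable[[float], bool],
    start_db: float = 40.0,
    step_db: float = 2.5,
    n_steps: int = 60,
) -> float:
    """Simplified fixed-frequency Békésy-style tracking (illustrative only).

    `hears(level)` is a hypothetical listener model. While the tone is
    reported audible, the level moves down by `step_db`; while it is not,
    the level moves up. The estimate is the mean of the reversal points.
    """
    level = start_db
    reversals: List[float] = []
    previous: Optional[bool] = None
    for _ in range(n_steps):
        audible = hears(level)
        if previous is not None and audible != previous:
            reversals.append(level)  # the tracing changes direction here
        previous = audible
        level += -step_db if audible else step_db
    if not reversals:
        raise ValueError("no reversals; try more steps or another start level")
    return mean(reversals)
```

For a listener who hears everything at or above 25 dB, `bekesy_track(lambda db: db >= 25.0)` settles into a zigzag between 22.5 and 25 dB. The estimate therefore lands between those two levels.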

Audiometry and Threshold Search

Building on Fechner’s method of limits, Bunch developed an early threshold search technique in 1943. To test hearing acuity, Bunch would first play a continuous tone at a suprathreshold level, to which the patient would respond. He would then turn off the tone, decrease the intensity, and resume the continuous tone at this lower level. This process was repeated until the patient no longer responded, and then repeated in the ascending direction. Threshold was defined as the midway point between the average threshold of the descending runs and the average threshold of the ascending runs.
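
Bunch’s definition of threshold comes down to a small calculation. Average the levels at which the descending runs ended, average the levels at which the ascending runs ended, and take the midpoint of the two averages. Here is a minimal Python sketch. The function name and the run values in the usage line are hypothetical and used for illustration only.

```python
from statistics import mean
from typing import Sequence


def bunch_threshold(
    descending_ends: Sequence[float], ascending_ends: Sequence[float]
) -> float:
    """Midpoint between the mean descending-run and mean ascending-run thresholds."""
    return (mean(descending_ends) + mean(ascending_ends)) / 2


print(bunch_threshold([20, 25], [25, 30]))  # → 25.0
```

In this example the descending runs average 22.5 dB and the ascending runs average 27.5 dB. Splitting the difference gives 25 dB.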

Clinicians had observed early on that patients responded more readily to tones presented against a background of silence than to continuous tones. Drawing on this observation and on Bunch’s technique, Walter Hughson and Harold Westlake published a paper in 1944 recommending an ascending method of threshold search. In this method, threshold is defined as the lowest level at which a response is obtained in 2 out of 3 ascending runs. Carhart and Jerger further refined the Hughson-Westlake method in the 1950s, recommending that tones be presented in 5 dB steps. This technique is still used today and remains largely unchanged.
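
The ascending search can be sketched in Python as follows. This is a simplified illustration under several assumptions, not a clinical protocol:

- `responds` is a hypothetical listener model that returns True when a tone at a given level (dB HL) is heard.
- Testing starts at 40 dB HL.
- After each response the level drops by 10 dB, and after each miss it rises by 5 dB. This "down 10, up 5" convention comes from common clinical practice and is not stated above.
- Levels are bounded to -10 and 120 dB HL.
- A level counts as threshold once it gets 2 responses within at most 3 ascending presentations.

```python
from collections import defaultdict
from typing import Callable, Optional


def hughson_westlake(
    responds: Callable[[int], bool],
    start_db: int = 40,
    step_down: int = 10,
    step_up: int = 5,
    min_db: int = -10,
    max_db: int = 120,
    max_presentations: int = 200,
) -> Optional[int]:
    """Simplified Hughson-Westlake-style ascending threshold search (sketch)."""
    hits = defaultdict(int)    # responses on ascending presentations, per level
    trials = defaultdict(int)  # ascending presentations, per level
    level = start_db
    ascending = False  # True when the current level was reached by stepping up

    for _ in range(max_presentations):
        heard = responds(level)
        if ascending:
            trials[level] += 1
            if heard:
                hits[level] += 1
                # 2 responses within at most 3 ascending presentations
                if hits[level] >= 2 and trials[level] <= 3:
                    return level
        if heard:
            if level <= min_db:
                return min_db  # heard at the softest level available
            level = max(min_db, level - step_down)  # response: step down
            ascending = False
        else:
            if level >= max_db:
                return None  # no response at the output limit
            level = min(max_db, level + step_up)  # no response: step up
            ascending = True
    return None  # no threshold found within the presentation budget


print(hughson_westlake(lambda db: db >= 25))  # → 25
```

For a listener who hears everything at or above 25 dB HL, the search follows this path:

1. It descends 40 → 30 → 20.
2. It misses at 20, climbs to 25 and gets a response.
3. It drops to 15, climbs through 20 to 25 and gets a second ascending response.
4. It stops at 25.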

Calibration and Standards

In response to the unstandardized calibration of audiometers, the United States Public Health Service (USPHS) decided to study the hearing acuity of the US population in 1935. Headed by Willis Beasley, this became known as the “Beasley Survey,” and its results provided the first data on average normal hearing thresholds, in SPL, from 128 to 8192 Hz. Despite the great need for a standard calibration and the important data gleaned from the Beasley Survey, a standard audiometer calibration was not published until 1951. That year, the American Standards Association (ASA), a volunteer-based organization of manufacturers, consumers, and engineers, among others, published the normal hearing threshold in SPL at each frequency. This was the 0 dB threshold line that we are all familiar with now.

Because no comparable data existed at the time, the standard, called the ASA-1951, gained traction globally. Soon after, other countries such as the UK and Japan completed their own versions of the Beasley Survey. After years of global collaboration, an international standard, the ISO-64, was released in 1964. Like the ASA-1951, this standard established a zero line by which all audiometers should be calibrated.

As with any big change, a transition period followed. The ISO-64 zero line sat roughly 10 dB lower than the ASA-1951 line. This shift affected not only patient care but also the military and veterans affairs, where compensation was based on hearing thresholds. As a result, providers reporting a hearing loss also had to state which standard they were using, the ASA-1951 or the ISO-64. The transition ended in 1969, when the ASA, by then renamed the American National Standards Institute (ANSI), refined the ISO-64 and released a new standard, the ANSI-69. It formed the basis for later standards such as the ANSI-96.

The Audiogram

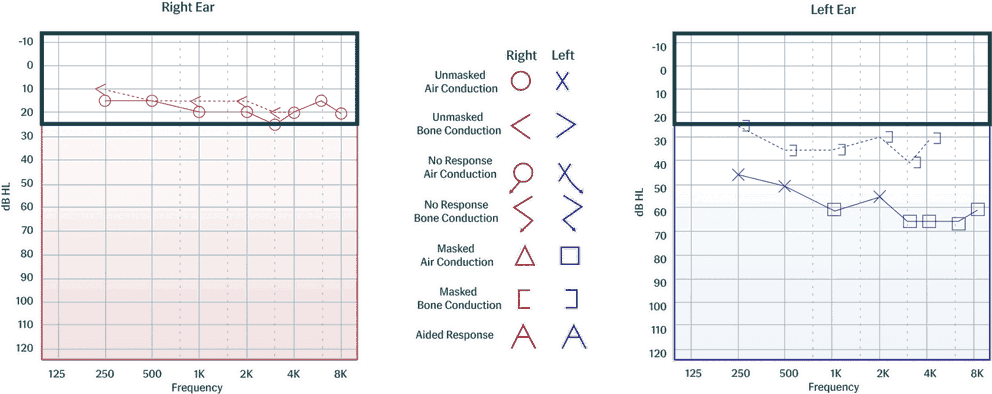

The first modern audiogram was developed in 1922 by Fletcher and Wegel, the individuals responsible for the first widely used commercial audiometer, alongside the prominent otolaryngologist Dr. Edmund Prince Fowler. The team decided that hearing loss relative to the zero hearing threshold line would be plotted downward on a graph, by pure tone frequency. This was the first time hearing was represented graphically on a logarithmic scale.

Present Day

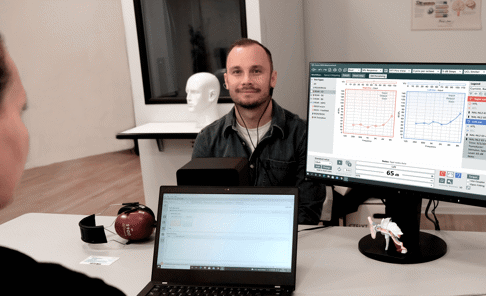

Audiometry has come a long way since its early beginnings, and the evolution of audiometers has played a crucial role in this progress. PC-based audiometers are now essential tools in the diagnosis and management of hearing loss and are used in a variety of settings, including hospitals, clinics, and schools. Audiometers have become increasingly sophisticated and can now quickly and accurately produce a wide range of frequencies and intensities, as well as speech stimuli. In addition, modern audiometers often have advanced features such as internet connectivity and computerized testing, which allow for more precision and efficiency. With features such as personalized workflows and “nudging,” or reminders during testing, the Measure family of audiometers is ahead of the curve. These features not only help save clinic time but also drive a better experience for your patients.

With precise testing comes precise hearing aid fitting. Because fittings rely heavily on audiometry, advances in audiometers have enabled advances in hearing aid fitting. For example, PC-based audiometers like the Measure - Diagnostic Audiometer & Fitting Unit allow you to perform Real Ear Measurements (REM) to ensure hearing aids are optimally fit.

As technology continues to advance, audiometers will likely become even more sophisticated and capable of providing more detailed and accurate hearing assessments. The Measure family of software and equipment remains at the forefront of these advancements. However, the history of audiometry is not forgotten. Its influence runs from the work of Békésy, Hughson, and Westlake behind the integrated Auto-Hughson-Westlake method to the standards set by the Beasley Survey.

-

Over time, many individuals developed instruments designed to measure hearing acuity. One of the first devices used to measure the “keenness of hearing” was developed in 1899 by Carl E. Seashore, but it was quite different from what we consider an “audiometer” today. Earlier still was the 1879 device developed by David Edward Hughes, based on the telephone technology first introduced by Alexander Graham Bell. It was, however, largely rejected by practitioners and not widely used. Thus, although it was not the first device to measure hearing, the Western Electric 2-A audiometer can be considered the first commercially used, modern-day audiometer. It was developed in 1923 by Harvey Fletcher and R.L. Wegel.

-

In the 19th century, providers primarily utilized tuning fork tests to establish a patient’s hearing loss type and degree. The earliest mention of an electric device used to measure hearing acuity was in 1879, when David Edward Hughes adapted telephone technology. This device, however, was not widely accepted and is quite different from the modern-day audiometer. The first commercially accepted audiometer was developed in 1923 by Harvey Fletcher and R.L. Wegel.

-

The first graphical representation of hearing loss was created in 1885 by Arthur Hartmann, who plotted left and right tuning fork responses and percentage of hearing on what he called an “Auditory Chart.” Later, in 1903, Max Wien developed the “Sensitivity Curve,” which described hearing thresholds in terms of frequency. The audiogram used today was first introduced in 1922 by Fletcher and Wegel. They introduced the logarithmic scale for plotting hearing thresholds, and they also placed frequency on the abscissa and intensity on the ordinate.
